The most realistic humanoid in the world.

Source

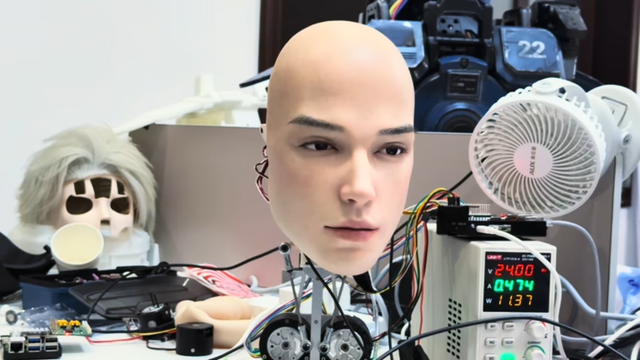

A simple blink may seem banal to us, but when it comes from a machine it can be disturbing. That was precisely the sensation caused by a new video from the Chinese company AheadForm, which presented its humanoid robotic head, capable of reproducing facial expressions with surprising realism.

At the heart of this innovation is the combination of self-supervised artificial intelligence algorithms and high-precision bionic actuators that reproduce subtle movements of the human face. Every blink, every muscle contraction and every sideways glance was designed to simulate the complexity of human expressions.

The most intriguing moment, however, comes at the climax of the demonstration, when the robotic head not only reproduces human gestures but also gives the impression of thinking and reacting authentically. The gaze that tracks its surroundings, the blinking that comes at the right moment and the lip synchronization with speech reinforce the illusion of presence, as if we were facing a being that is part human, part machine.