Circuit Tracer - Anthropic's open tools to see how AI thinks

Circuit Tracer

Anthropic's open tools to see how AI thinks

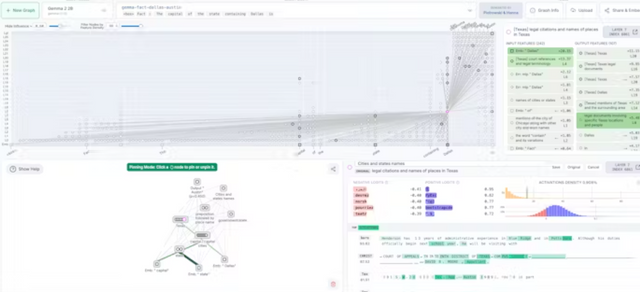

Screenshots

Hunter's comment

Anthropic's open-source Circuit Tracer helps researchers understand LLMs by visualizing internal computations as attribution graphs. You can explore the graphs on Neuronpedia or use the library directly. The goal is greater AI transparency.

We often hear about how large language models are like "black boxes," and understanding how they arrive at their outputs is a huge challenge. Anthropic's new open-source Circuit Tracer tools offer a fascinating step towards peeling back those layers.

Rather than building bigger models, this initiative is about building better tools to see inside the ones we already use. Researchers and enthusiasts can now generate and explore attribution graphs, which map out parts of a model's internal decision-making process for a given prompt, on models like Llama 3.2 and Gemma-2. You can even modify internal features and observe how the outputs change.
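To make the idea a bit more concrete, here is a toy sketch of what "attribution" and "modifying a feature" can mean. It does not use the Circuit Tracer library or its API. The tiny model, its weights, and every function name below are made up for illustration, and real attribution graphs are far more involved.

```python
# Toy illustration only. This is NOT the Circuit Tracer API.
# All names, weights, and inputs are hypothetical.

def feature_activations(inputs, w_in):
    """Each feature is a ReLU of a weighted sum of the inputs."""
    return [max(0.0, sum(x * w for x, w in zip(inputs, row))) for row in w_in]

def output_logit(features, w_out):
    """The output logit is a weighted sum of the feature activations."""
    return sum(f * w for f, w in zip(features, w_out))

def attributions(features, w_out):
    """Direct contribution of each feature to the logit: activation * weight."""
    return [f * w for f, w in zip(features, w_out)]

inputs = [1.0, 2.0]
w_in = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]  # one row of weights per feature
w_out = [0.5, 1.5, 2.0]                        # one output weight per feature

features = feature_activations(inputs, w_in)
logit = output_logit(features, w_out)
attr = attributions(features, w_out)

# Intervention: switch off feature 1 and see how the output moves.
patched = list(features)
patched[1] = 0.0
patched_logit = output_logit(patched, w_out)

print("attributions:", attr)
print("logit before:", logit, "after:", patched_logit)
```

In this linear toy, the drop in the logit after switching off a feature equals that feature's attribution. In a real model that only holds approximately, which is part of why tools for checking interventions are useful.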

As AIs get more capable, genuinely understanding their internal reasoning, how they plan, or even when they mi

Link

https://www.producthunt.com/products/circuit-tracer

This is posted on Steemhunt - A place where you can dig products and earn STEEM.

